Spatial Audio: The Continuing Evolution

Written by Milou Derksen (Edits: Dennis Beentjes, Robin Reumers)

Let’s dive into the world of Spatial Audio!

“But wait!” you might ask, “what do you mean by Spatial Audio? Are you talking about Immersive Audio, 3D Sound, Surround Sound, Binaural, Auro-3D, Dolby Atmos, 360-sound…?” Well, yes, sort of. In principle, we are talking about anything related to ‘sound beyond stereo’, which is not an entirely new thing in itself. However, thanks to rapid developments in audio (and music) for VR, and to major platforms like Facebook and YouTube fully implementing Spatial Audio technology and offering free tools to anyone interested in experimenting with it, it’s more relevant than ever before.

OK, we hope we have answered your first question. However, you might be asking yourself another one: “Is this something for me as a music producer?” That is certainly a good question, and with this article we want to give you a head start in finding the answer. We explore the different areas and technologies related to Spatial Audio, including resources for you to explore further.

Now let’s start!

Immersive audio, 3D sound, surround, binaural, Auro-3D, Dolby Atmos, object-based audio, 360-sound, spatial audio. By now, you have probably come across a variety of terms and opinions regarding sound beyond stereo. It has become something of a buzzword since platforms like Facebook and YouTube started letting you get involved in the 3D world. Perfect timing to dig a little deeper into this fascinating subject!

It’s all around us

You have probably already sat in a cinema with 3D audio, with speakers everywhere and sounds coming from all around you. And you most likely agree with George Lucas, who said that sound is 50% of the movie-going experience. Without sound, a movie loses much of its impact.

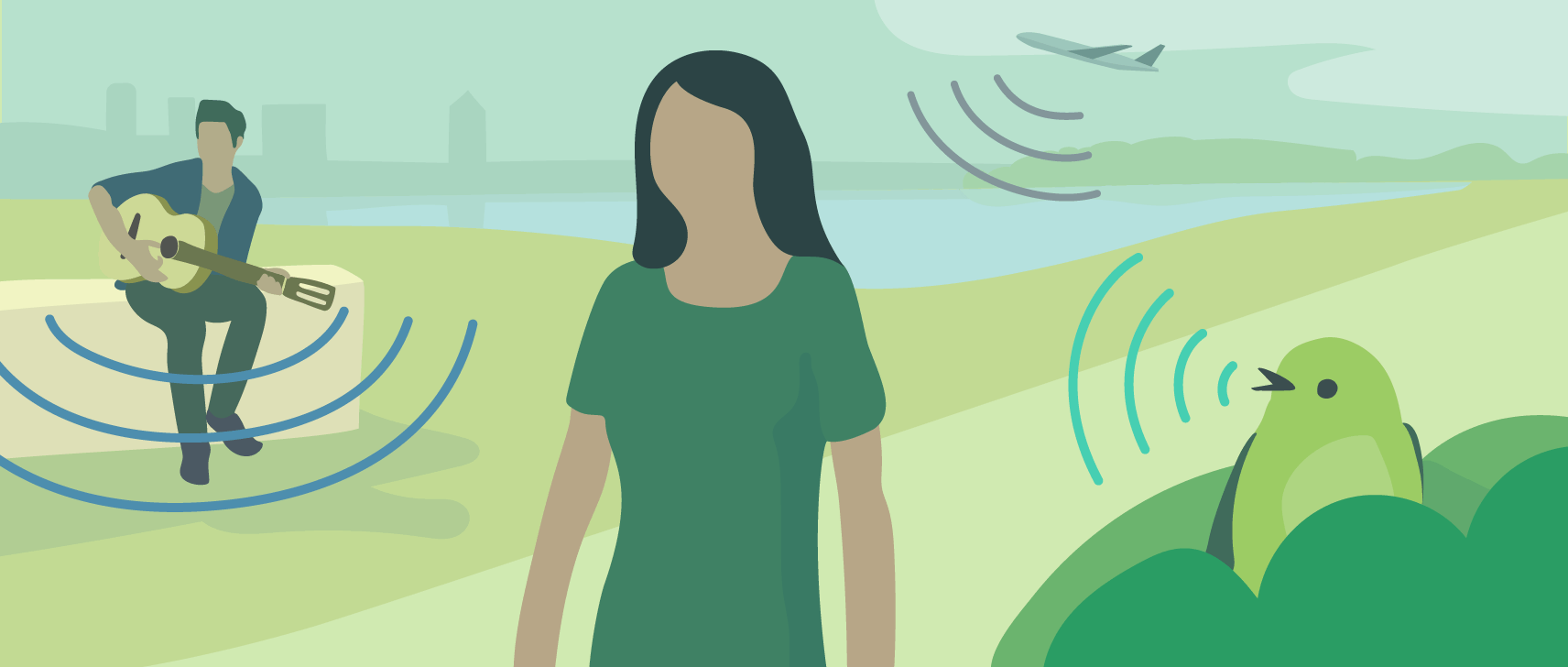

Most of us listen to music and other audio in stereo, which only places sound in one dimension: from front left to front right. Surround sound added a second dimension, and with the introduction of 3D or immersive sound, you can now sense sound sources coming from all around you. With Virtual Reality emerging rapidly, three-dimensional audio is getting more attention than ever. Convincing VR needs audio that places sounds in a three-dimensional space, so that users perceive them as coming from the physical objects in their VR experience. In other words, it should sound as close as possible to what we hear in real life.

Immersive audio is also making its way (back) into the music industry, and we are excitedly riding this wave. Not long ago, we teamed up with successful DJ, producer and remixer Ferry Corsten and sound engineer Ronald Prent. Ferry had always wanted to create an album that would give the listener a full musical experience, and that became reality: together with Ronald Prent, he turned his album “Blueprint” into a full 9.1 immersive music journey, right here at Abbey Road Institute Amsterdam. Read more about it here: https://abbeyroadinstitute.nl/blog/addicted-immersive-sound/

While we love adding more and more speakers all around us, one can argue that we humans listen with just two microphones: our ears. This brings us to spatial audio through headphones. It turns out to be entirely possible to create a full 3D experience using only headphones. Since you can now create and record 3D sound yourself, we wanted to take a closer look at how to approach this. But first, a bit of history.

Where it all began

It might all sound new and innovative, but make no mistake: binaural audio was invented in the late 19th century, a time when amplifiers did not even exist yet. The only way to listen to electrical audio signals was through a telephone earpiece, and every radio came with a pair of headphones until the mid-1920s.

But as you can guess, the introduction of the moving-coil loudspeaker changed all that, paving the way for stereophonic sound. Binaural audio was largely left untouched for years until artists like Lou Reed and even The Rolling Stones started experimenting with it. By playing with placement and accounting for the space between the listener’s ears, they found ways to make the audience feel as if they were really present in the recording space.

In 1972, German company Neumann unveiled the KU-80, the first commercial head-based binaural recording system, at the International Radio and Television Exhibition in Berlin. Similar dummy heads from Sony, JVC and Sennheiser soon followed, giving creators the tools to invent new ways of recording sound and music. Later in the ’70s, Lou Reed and German sound engineer Manfred Schunke made Street Hassle, the first commercial pop album recorded in binaural audio, and followed it up with two more binaural albums: 1978’s Live: Take No Prisoners and 1979’s The Bells. (Check out “the best albums recorded in binaural audio”: https://hookeaudio.com/blog/music/best-binaural-albums/)

However, binaural slid into the background because quality recordings required expensive, specialised equipment and proper reproduction required headphones. Moreover, the material that could be recorded this way typically had limited market value. Still, a select group of binaural enthusiasts kept it alive and worked on improving the technology.

Binaural recording techniques have since undergone a revival, and development did not stand still in the meantime. Technological advances in audio for VR and games, the widespread availability of headphones, cheaper recording methods and growing commercial interest in 360° audio technology have put binaural recording and reproduction back in the spotlight. Now we crave immersive experiences, and creators in every sector (VR, music, podcasts, gaming) are tapping into the potential of binaural.

Definition of Spatial Audio

Spatial Audio is any audio that gives you a sense of space beyond conventional stereo, allowing the listener to pinpoint where a sound is coming from, whether above, below or anywhere in a full 360 degrees around them. Stereo lets you hear things in front of you, from left to right, but you can’t get a sense of surround, height or sounds from below. With the introduction of the third dimension, you get a sense of the exact location of sound sources all around you.

Before we look into the recording part, let’s talk a bit about the reproduction first:

How do you listen to Spatial Audio?

You can listen to spatial audio in numerous ways, using either speakers or headphones. Both can deliver an enhanced listening experience, but they usually serve different purposes. Let’s look at four currently available options.

1. Multiple speakers

One way to create a spatial soundscape is to place multiple speakers around a room. When you listen to a movie soundtrack or piece of music through a surround sound system, individual elements can be panned to any location on the same horizontal plane as the listener’s head. Dialogue, music and sound effects can seem to come from the speakers themselves or from anywhere in between. We’ve all experienced a well-mixed soundtrack: surround sound works. Since 2010, Auro Technologies, Dolby and DTS have each added height channels to their theatre sound systems, adding the third dimension. Setups with individual speakers (sometimes even up to 128 of them) work well in dedicated fixed rooms, such as a cinema or a serious home cinema, but they might not suit your average living room.
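Panning a sound “anywhere in between” two speakers comes down to choosing a gain for each speaker. As a rough illustration, here is a minimal sketch of pairwise amplitude panning in the style of 2D vector base amplitude panning (VBAP): find the neighbouring speaker pair that encloses the source, solve for the two gains and normalise them for constant power. The function name and the 5.0 speaker angles are our own assumptions, not taken from any particular product.

```python
import math

def pairwise_pan(source_deg, speaker_degs):
    """Gains for a source on the horizontal plane, one per speaker.

    2D VBAP-style sketch: find the neighbouring speaker pair that encloses
    the source, solve for the two gains, then normalise to constant power.
    Assumes speaker_degs is sorted ascending in [0, 360) and that
    neighbouring speakers are less than 180 degrees apart.
    """
    n = len(speaker_degs)
    px = math.cos(math.radians(source_deg))
    py = math.sin(math.radians(source_deg))
    for i in range(n):
        j = (i + 1) % n  # wrap around: the last speaker pairs with the first
        a = math.radians(speaker_degs[i])
        b = math.radians(speaker_degs[j])
        det = math.cos(a) * math.sin(b) - math.cos(b) * math.sin(a)
        if abs(det) < 1e-12:
            continue
        # Solve p = g1 * l_a + g2 * l_b for (g1, g2) with Cramer's rule
        g1 = (px * math.sin(b) - py * math.cos(b)) / det
        g2 = (py * math.cos(a) - px * math.sin(a)) / det
        if g1 >= -1e-9 and g2 >= -1e-9:  # both non-negative: source lies inside this pair
            g1, g2 = max(g1, 0.0), max(g2, 0.0)
            norm = math.hypot(g1, g2)
            gains = [0.0] * n
            gains[i], gains[j] = g1 / norm, g2 / norm
            return gains
    raise ValueError("no speaker pair encloses the source")

# Hypothetical 5.0 layout: C, L, Ls, Rs, R (degrees, counter-clockwise from front)
speakers = [0, 30, 110, 250, 330]
print(pairwise_pan(15, speakers))  # about 0.707 on C and L, zero elsewhere
```

Cinema and home formats with height speakers extend the same idea to speaker triplets in 3D, or hand the job to a renderer that reads object metadata.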

2. Soundbars or stereo speakers using crosstalk cancellation

For the money, a smart sound bar, one of those small linear arrays of speakers typically tucked under a television, might be just what many home theatre enthusiasts need. Newer models claim to deliver a full 3D experience, projecting sound all around you. These models typically use a technique based on crosstalk cancellation.

Crosstalk cancellation plays an important role in reproducing binaural signals over loudspeakers. It aims to deliver the binaural signals to the listener’s ears by inverting the acoustic transfer paths from the speakers to the ears. Put simply, it cancels the sound travelling from the right speaker to your left ear and from the left speaker to your right ear. Because those paths change whenever the listener moves, the crosstalk cancellation filter should be updated in real time according to the head position, and hence requires head tracking to function optimally.
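In signal terms, “inverting acoustic transfer paths” means that, at each frequency, the two-speaker, two-ear system behaves like a 2×2 matrix H, and the canceller is its inverse. The sketch below works on a single frequency bin; the 0.7 cross-path gain and 0.25 ms extra delay are toy values we assumed for illustration, where real systems use measured or modelled head-related transfer functions.

```python
import cmath
import math

def transfer_matrix(freq_hz, contra_gain=0.7, extra_delay_s=0.00025):
    """Toy 2x2 acoustic matrix H[ear][speaker] for a symmetric listening setup.

    Assumption: direct (same-side) paths are 1; cross (opposite-side) paths
    are attenuated by contra_gain and arrive extra_delay_s later.
    """
    cross = contra_gain * cmath.exp(-2j * math.pi * freq_hz * extra_delay_s)
    return [[1.0, cross], [cross, 1.0]]

def invert_2x2(m):
    (a, b), (c, d) = m
    det = a * d - b * c  # approaches zero when cross paths are as strong as direct ones
    return [[d / det, -b / det], [-c / det, a / det]]

def apply(m, v):
    """Multiply a 2x2 matrix by a 2-element vector."""
    return [m[0][0] * v[0] + m[0][1] * v[1], m[1][0] * v[0] + m[1][1] * v[1]]

# Desired binaural signal at (left ear, right ear) for one frequency bin
binaural = [1.0 + 0.0j, 0.2 + 0.1j]
H = transfer_matrix(1000.0)
C = invert_2x2(H)                  # the crosstalk canceller for this bin
speaker_feeds = apply(C, binaural)
at_ears = apply(H, speaker_feeds)
print(at_ears)  # matches `binaural` up to rounding error
```

If the listener moves, H changes and the computed inverse no longer fits, which is why head tracking helps. When the cross paths are nearly as strong as the direct ones, as tends to happen at low frequencies, the determinant gets close to zero and practical systems regularise the inversion.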

This area is seeing a lot of innovation, and we are likely to see more breakthroughs in the next few years. The approach works well in rooms where it is impractical to place speakers all around the listener, such as a typical living room.

3. Headphones using static binaural mixes

A static binaural mix presents the listener with a fixed soundfield. Binaural attempts to simulate 3D audio on headphones, by modifying sound as if it had travelled around your head and past your ears. This gives the mixing engineer an extremely high level of control over the experience, as the listener has no input into the system. This also allows the mixing engineer to approach 3D audio mixing with a very similar workflow to traditional stereo mixing applications.
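Under the hood, a static binaural mix amounts to filtering each source with a pair of head-related impulse responses (HRIRs), one per ear. The sketch below uses plain direct convolution and hypothetical four-tap toy HRIRs that model only a small delay and level drop at the far ear; measured HRIRs are far longer and are usually applied with FFT-based convolution.

```python
def convolve(signal, impulse_response):
    """Direct linear convolution; output length is len(signal) + len(ir) - 1."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for n, x in enumerate(signal):
        for k, h in enumerate(impulse_response):
            out[n + k] += x * h
    return out

def render_binaural(mono, hrir_left, hrir_right):
    """Place a mono source by filtering it with a left/right HRIR pair."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)

# Hypothetical toy HRIRs for a source on the listener's right:
# the left ear hears it 3 samples later and at half the level.
hrir_right = [1.0, 0.0, 0.0, 0.0]
hrir_left = [0.0, 0.0, 0.0, 0.5]
left, right = render_binaural([1.0, 0.5], hrir_left, hrir_right)
print(left, right)
```

Because the filters never change, the engineer decides exactly what the listener hears, which is the control (and the limitation) described above.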

4. Headphones using a combination of head tracking and head-locked audio

Binaural sound on its own, however, often does not sound real, partly because it does not change when you turn your head. This is where head tracking comes in. The position and orientation of the listener’s head are tracked, for example using optical camera methods or gyroscopic sensors, so that binaural rendering can follow the listener’s movements. In other words, the rendering is updated as a function of the listener’s head rotation and position.

So let’s say that you have got your Ambisonics mic positioned just right and your individual objects moving in perfect sync with the action on screen, but the producer wants to add some background music. You load a slave plugin on a stereo music track and it appears in the master plugin as it should. However, when the listener turns their head, the music is spatialised along with that motion. What if you do not want this, and would rather keep the stereo music locked in place regardless of user interaction? For that, you can add head-locked stereo audio to your videos, which does not change when a viewer moves their head. A head-locked stereo track is often used for narration or background music.
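The difference between world-locked and head-locked sources comes down to whether the renderer compensates for head rotation. Below is a minimal sketch of that decision for azimuth only; the function name, the yaw-only tracking and the counter-clockwise-positive angle convention are assumptions for illustration, not taken from any of the tools mentioned here.

```python
def relative_azimuth(source_az_deg, head_yaw_deg, head_locked=False):
    """Azimuth at which to render a source, relative to where the listener faces.

    Angles are in degrees, positive to the listener's left (counter-clockwise).
    World-locked sources counter-rotate as the head turns; head-locked
    sources (narration, background music) ignore the head yaw entirely.
    """
    if head_locked:
        return source_az_deg
    rel = source_az_deg - head_yaw_deg
    return (rel + 180.0) % 360.0 - 180.0  # wrap into [-180, 180)

# The listener turns 90 degrees to the left:
print(relative_azimuth(0, 90))                    # dialogue in front now sits to the right
print(relative_azimuth(0, 90, head_locked=True))  # stereo music stays put
```

A real renderer applies the same compensation in three dimensions (yaw, pitch and roll) and updates it continuously from the tracker.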


How does it work?

The main cues our brain uses to locate a sound are “binaural” in nature, stemming from the physical separation between our two ears. When a sound source is off-centre, there is a subtle time delay between the signal reaching the nearer ear and the further one. This “Interaural Time Difference” (ITD) introduces a wavelength-dependent phase difference that the brain instantly interprets as a cue for direction. The further to the right a source is, the larger the delay between the sound reaching the right ear and the left. Phase differences become harder to interpret at higher frequencies, so this cue is most effective below around 1–1.5 kHz.
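To get a feel for how small the ITD is, here is a sketch of Woodworth’s classic spherical-head approximation, ITD ≈ (a/c)(θ + sin θ), where a is the head radius, c the speed of sound and θ the azimuth in radians. The 8.75 cm radius and 343 m/s are assumed typical values; real heads, and therefore real ITDs, vary. With these values the ITD peaks at roughly 0.66 ms for a source directly to one side.

```python
import math

def woodworth_itd(azimuth_deg, head_radius_m=0.0875, speed_of_sound=343.0):
    """Approximate ITD in seconds for a distant source (spherical head model).

    azimuth_deg: 0 = straight ahead, 90 = directly to one side.
    Angles behind the listener are mirrored to the front, because this
    simple model cannot tell front from back.
    """
    a = abs(azimuth_deg) % 360.0
    if a > 180.0:
        a = 360.0 - a      # left/right symmetry
    if a > 90.0:
        a = 180.0 - a      # front/back ambiguity of the model
    theta = math.radians(a)
    return (head_radius_m / speed_of_sound) * (theta + math.sin(theta))

for az in (0, 30, 60, 90):
    print(f"{az:>2} deg -> {woodworth_itd(az) * 1e3:.2f} ms")
```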

In addition to this time difference, your head acts as an obstacle: it shadows the ear furthest from the source, so the sound reaches that ear at a lower level. Conveniently, this shadowing effect, the “Interaural Level Difference” (ILD), operates mainly at high frequencies, which the head blocks, while lower frequencies, whose wavelengths are longer than the head is wide, diffract around it. The two cues therefore complement each other across the frequency range.
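A quick way to see why head shadowing is a high-frequency effect is to compare wavelength with head size. The sketch below assumes a head width of 17.5 cm and a speed of sound of 343 m/s; the “onset” frequency it computes is a rule of thumb, not a sharp cutoff, since shadowing grows gradually with frequency.

```python
SPEED_OF_SOUND = 343.0  # m/s, air at roughly room temperature
HEAD_WIDTH_M = 0.175    # assumed typical adult head width

def wavelength_m(freq_hz):
    return SPEED_OF_SOUND / freq_hz

def shadow_onset_hz(head_width_m=HEAD_WIDTH_M):
    """Frequency whose wavelength equals the head width (rough rule of thumb)."""
    return SPEED_OF_SOUND / head_width_m

for f in (250, 500, 1000, 2000, 4000, 8000):
    lam = wavelength_m(f)
    regime = "mostly diffracts around the head" if lam > HEAD_WIDTH_M else "increasingly shadowed"
    print(f"{f:>5} Hz: wavelength {lam:.3f} m, {regime}")

print(f"rough onset of shadowing: {shadow_onset_hz():.0f} Hz")
```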

3 main approaches to spatial audio

As immersive video and audio formats grow in popularity, audio engineers have been experimenting with techniques for recording high-quality spatial audio. So have the engineers at our mothership, Abbey Road Studios. Led by Head of Audio Products Mirek Stiles, they have set up The Abbey Road Spatial Audio Forum, which, alongside practical experiments and academic projects, aims to help artists navigate the space and deliver the best possible experience.

There are three types of audio we need to consider when it comes to recording and mixing spatial audio: Channel, Object and Ambisonic. These are not mutually exclusive and in some cases, all three are used together.

In a channel-based approach, the production is tied to the reproduction format. Various sound sources are mixed in a DAW into a final channel-based mix, usually for a specific target loudspeaker layout. The downside is that each audio channel in the final product has to be reproduced by a loudspeaker at a well-defined position. This fixed mix is delivered to the end user with essentially no means of adapting it to their needs, whether that is a specific playback device or their personal preferences.
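Because a channel-based mix is tied to its speaker layout, playing it on a different system means reshaping it with a fixed matrix. As an illustration, here is a sketch of a 5.1-to-stereo downmix using the −3 dB (1/√2) centre and surround coefficients commonly associated with ITU-R BS.775. Treat the coefficients as one common convention rather than the only correct choice; real downmixers also guard against clipping, which this sketch does not.

```python
import math

K = 1.0 / math.sqrt(2.0)  # -3 dB for centre and surrounds

def downmix_51_to_stereo(l, r, c, lfe, ls, rs):
    """Fold one sample frame of a 5.1 mix down to two channels.

    The LFE channel is dropped here, as is common in stereo downmixes.
    """
    lo = l + K * c + K * ls
    ro = r + K * c + K * rs
    return lo, ro

print(downmix_51_to_stereo(0.0, 0.0, 1.0, 0.0, 0.0, 0.0))  # centre split equally to both sides
```

Whatever the coefficients, the listener on stereo hears a mechanical reshaping of a mix that was balanced for five speakers, which is exactly the inflexibility described above.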

The object-based approach overcomes this obstacle. It encodes each audio source independently, together with positional metadata, and leaves it to the renderer on the reproduction side to place the audio as accurately as it can at the desired location. At the point of consumption, these objects are assembled into an overall user experience that can respond flexibly to the user, the environment and platform-specific factors. As a result, the same object-based mix can be reproduced on anything from mono right through to a full 360-degree sphere.
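To make the idea concrete, here is a minimal sketch of an object (mono audio plus positional metadata) and one possible renderer for a stereo target. The `AudioObject` schema, the ±30° speaker span and the sine/cosine (constant-power) pan law are our own assumptions for illustration; real object-based formats define much richer metadata, and a renderer for another layout would read the same metadata differently.

```python
import math
from dataclasses import dataclass
from typing import List

@dataclass
class AudioObject:
    """A mono source plus positional metadata (hypothetical minimal schema)."""
    name: str
    samples: List[float]
    azimuth_deg: float  # 0 = front, positive = to the listener's left
    gain: float = 1.0

def render_to_stereo(objects, speaker_span_deg=30.0):
    """Render objects to two speakers at +/-speaker_span_deg.

    Each object's azimuth is clamped to the speaker arc and mapped to a
    constant-power (sine/cosine) pan.
    """
    length = max(len(o.samples) for o in objects)
    left = [0.0] * length
    right = [0.0] * length
    for obj in objects:
        az = max(-speaker_span_deg, min(speaker_span_deg, obj.azimuth_deg))
        # Map -span..+span to a pan angle 0..pi/2 (0 = hard right, pi/2 = hard left)
        p = (az + speaker_span_deg) / (2 * speaker_span_deg) * (math.pi / 2)
        gl, gr = math.sin(p) * obj.gain, math.cos(p) * obj.gain
        for i, s in enumerate(obj.samples):
            left[i] += gl * s
            right[i] += gr * s
    return left, right

mix = [AudioObject("vocal", [1.0, 0.5], azimuth_deg=0.0),
       AudioObject("guitar", [0.2, 0.2], azimuth_deg=45.0)]
print(render_to_stereo(mix))
```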

Ambisonics represents the full 360-degree sphere and can be captured from a single point with ambisonic microphones or created artificially in post-production. Ambisonics comes in two flavours: FOA (First Order) and HOA (Higher Order). FOA consists of four channels: omni, left/right, front/back and up/down. HOA adds more channels; more channels technically equate to higher spatial resolution, and higher resolution means better localisation.
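The jump in channel count from first to higher order follows a simple rule: full-sphere Ambisonics of order N needs (N + 1)² channels, so third order needs 16. The sketch below also computes the Ambisonic Channel Number (ACN) used by the widely adopted AmbiX convention, in which the first-order channels are ordered W, Y, Z, X.

```python
def ambisonic_channels(order):
    """Channels needed for full-sphere Ambisonics of a given order: (N + 1)^2."""
    return (order + 1) ** 2

def acn_index(degree, index):
    """Ambisonic Channel Number for the spherical harmonic of degree n, index m."""
    if abs(index) > degree:
        raise ValueError("index must satisfy -degree <= index <= degree")
    return degree * (degree + 1) + index

for order in range(1, 4):
    print(f"order {order}: {ambisonic_channels(order)} channels")
```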

Recording techniques

Now that we have a better understanding of the reproduction side of spatial audio, let’s take a closer look at the recording side…

1. Dummy head

The good old dummy head (affectionately known to us as “Fritz”) is used to make binaural recordings, allowing the listener to hear from the dummy’s perspective on headphones.

“It resembles the human head and has two microphone capsules built into the ears”

This purely binaural recording technique uses two omnidirectional microphones placed in the ears of a dummy head and torso. This two-channel system emulates human hearing and captures important aural information about the distance and direction of the sound sources. Dummy head recordings give a much more immediate experience because they transport the listener into the environment where the acoustic event originally took place.

Binaural stereo must be played back on headphones, where it offers the listener a spherical sound image in which all sound sources are reproduced from the correct direction. However, two problems arise. First, every human head differs in shape and size, so a recording made with one head never perfectly matches another listener’s ears. Second, although you can hear in every direction, the audio does not respond to user input: if you move your head, the audio doesn’t change accordingly. The industry refers to this as “head-locked” audio.

2. Ambisonic microphones

Ambisonics is a multichannel technology that lets you capture the sound arriving from all directions at a single point in space. This can be done with dedicated ‘Ambisonic’ microphones, such as Sennheiser’s AMBEO and models by Soundfield, MH Acoustics and Core Sound. Most of these house not one but four sub-cardioid capsules pointing in different directions, an arrangement known as a tetrahedral array. Such microphones deliver first-order Ambisonics A-format, which needs to be converted into B-format. This conversion is a crucial part of the functionality and sound of the microphone. The resulting B-format consists of four signals:

W – a pressure signal corresponding to the output from an omnidirectional microphone

X – the front-to-back directional information, a forward-pointing velocity or “figure-of-eight” microphone

Y – the left-to-right directional information, a leftward-pointing “figure-of-eight” microphone

Z – the up-to-down directional information, an upward-pointing “figure-of-eight” microphone

A-format and B-format are the two formats you will deal with in an Ambisonics workflow. A-format is the raw audio from an ambisonic microphone, with one channel of audio per capsule. B-format is a standardised multichannel format for Ambisonic audio. Raw A-format recordings from any ambisonic microphone must be converted to B-format to work in post-production and for final delivery to content platforms like Facebook or YouTube. To do this, download and install the dedicated converter for your microphone and load it into your preferred Digital Audio Workstation.
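At its simplest, A-to-B conversion is a sum-and-difference matrix over the four capsules. The sketch below assumes the classic tetrahedral capsule naming (front-left-up, front-right-down, back-left-down, back-right-up) and leaves the output unscaled. It deliberately omits the per-capsule filtering and model-specific calibration that a manufacturer’s converter applies, which is exactly why the dedicated converter matters.

```python
def a_to_b(flu, frd, bld, bru):
    """Naive A-format to B-format conversion for one sample of a tetrahedral mic.

    Capsules: front-left-up, front-right-down, back-left-down, back-right-up.
    Returns (W, X, Y, Z), unscaled, without the equalisation real converters add.
    """
    w = flu + frd + bld + bru  # omnidirectional sum
    x = flu + frd - bld - bru  # front minus back
    y = flu - frd + bld - bru  # left minus right
    z = flu - frd - bld + bru  # up minus down
    return w, x, y, z

print(a_to_b(1.0, 1.0, 1.0, 1.0))  # equal pressure on all capsules: only W
```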

Ambisonics is supported by all of the major post-production and playback tools on the market today, which makes it a natural fit for Virtual Reality and other applications involving 3D sound. It is also future-proof, as a raw Ambisonic recording can be decoded to various formats at a later date.
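Decoding is what turns an Ambisonic recording into speaker feeds for whatever layout you have. As a simple illustration, and not a production-quality decoder, the sketch below decodes horizontal first-order signals to a hypothetical square of four speakers by giving each speaker the output of a virtual cardioid microphone pointed at it. It assumes SN3D-style scaling, where a source of amplitude s at azimuth θ gives W = s, X = s·cos θ, Y = s·sin θ.

```python
import math

def decode_horizontal_foa(w, x, y, speaker_azimuths_deg):
    """Virtual-cardioid decode of horizontal first-order Ambisonics.

    Each speaker receives 0.5 * (W + X cos(az) + Y sin(az)): the signal a
    cardioid microphone pointed at that speaker would pick up.
    """
    feeds = []
    for az_deg in speaker_azimuths_deg:
        az = math.radians(az_deg)
        feeds.append(0.5 * (w + x * math.cos(az) + y * math.sin(az)))
    return feeds

square = [45, 135, 225, 315]  # hypothetical square layout, counter-clockwise from front
src = math.radians(45)        # a source in the direction of the first speaker
feeds = decode_horizontal_foa(1.0, math.cos(src), math.sin(src), square)
print([round(f, 3) for f in feeds])
```

The same B-format could later be decoded to a different layout, or to binaural, simply by swapping the decoder, which is what makes the format future-proof.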

3. Microphone array

Not planning on buying one of these Ambisonic microphones? It requires a bit more expertise, but you can also use mono microphones in a spatial multichannel microphone array, such as the Hamasaki Square or the Equal Segment Microphone Array. You record the sound source with the array and then place the recorded channels in a three-dimensional sound field using Ambisonic or object-based software. Mirek Stiles explains how to use these techniques in detail in his article.

4. Point source recording

An alternative to using multiple microphones is to record a sound source with a single mono microphone and then use a pan pot to place it in the sound field. You can do this in any format: for example, you can pan it in a full 3D speaker setup, such as Auro-3D or Dolby Atmos, or create an Ambisonic track layout in your DAW and pan it with an Ambisonics panner. This approach is, of course, ideal for point sources.
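What an Ambisonics panner does with a mono point source can be sketched in a few lines: it multiplies the signal by direction-dependent gains. This sketch assumes the AmbiX convention (ACN channel order W, Y, Z, X with SN3D normalisation) and first order only; azimuth is measured counter-clockwise from the front and elevation upwards from the horizontal plane.

```python
import math

def encode_foa_ambix(sample, azimuth_deg, elevation_deg):
    """Encode one mono sample as first-order AmbiX [W, Y, Z, X] (SN3D)."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample                               # omnidirectional component
    y = sample * math.sin(az) * math.cos(el)  # left/right
    z = sample * math.sin(el)                 # up/down
    x = sample * math.cos(az) * math.cos(el)  # front/back
    return [w, y, z, x]

# A mono guitar DI panned 30 degrees to the left and 10 degrees up
frame = [encode_foa_ambix(s, 30.0, 10.0) for s in (0.1, 0.4, -0.2)]
print(frame[0])
```

Moving the source is just a matter of changing the angles over time; higher-order panners use more spherical-harmonic gains per sample but follow the same idea.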

Tools

One of the most important and commonly asked questions in the field of Spatial Audio is “Where do I start?” Well, nowadays it is quite easy to get involved. Thanks to software from Facebook, G’Audio, Blue Ripple and Audio Ease (to name just a few), you now have access to tools for creating spatial audio that work alongside your existing DAW production environment. Below, we explain some of the available tools.

G’Audio

As for software, G’Audio Lab has produced a free and very intuitive spatial plugin called “Works” that integrates seamlessly with 360 videos within Pro Tools. Works uses an “edit as you watch” approach to spatialisation, where all of your object panning can be done from a single plugin window. It has a simple plugin structure: slave plugins instantiated on any track you want spatial control over, and a single master plugin where you can edit these tracks against a QuickTime movie. Using the Pro Tools video engine, you can load a monoscopic or stereoscopic QuickTime movie and see where your sounds should go.

Facebook

The Facebook 360 Spatial Workstation is a software suite designed for creating spatial audio for 360 video and cinematic VR. It includes plugins for popular audio workstations, such as the FB360 Spatialiser, which is effectively a pan pot, and the FB360 Control plugin, which is your headphone monitor output. It also includes a time-synchronised 360 video player and utilities to help design and publish spatial audio in a variety of formats. It is free and available in both AAX and VST formats. You will need a DAW capable of handling 16-channel-wide tracks, as the FB software works in 3rd-order Ambisonics; Pro Tools, Reaper and Nuendo can all do this.

You can download the free software and experiment yourself: https://facebook360.fb.com/spatial-workstation/

Blue Ripple

Blue Ripple offers practical high-resolution 3D audio tools for the studio. Its free O3A Core plugin library provides the essential tools you need to get started with 3D mixes, including proper panning, visualisation, spatial manipulation and reverb. Not all hosts can cope with these plugins yet; Blue Ripple particularly recommends Pro Tools Ultimate or Reaper.

Audio Ease

If you are already a bit further along with 3D audio, you might want to have a look at Audio Ease’s 360pan suite. This plug-in suite is not free, but it does give you professional tools. It includes 360 panning and distancing of sound sources, and a monitor that lets you look around and listen to your immersive mix while you are making it. It also offers a convolution reverb with Ambisonics impulse responses, a radar that shows the location of your audio in the Ambisonics mix, and the ability to rotate or tilt an Ambisonics recording so that misalignment and calibration errors of the microphone can be easily corrected. You can do all your panning, distancing and even mixing from within the video window of your DAW (Reaper or Pro Tools HD/Ultimate), with no need to have any plug-in interfaces open while working.
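Rotating a first-order recording about the vertical axis, for example to correct a microphone that was set up slightly off its intended front, only mixes the X and Y channels. This is a minimal sketch assuming AmbiX channel order [W, Y, Z, X] and counter-clockwise-positive angles; it is not how Audio Ease implements its tools, and tilting (rotation about the other axes) would also involve Z.

```python
import math

def rotate_foa_yaw(ambix, angle_deg):
    """Rotate one first-order AmbiX frame [W, Y, Z, X] about the vertical axis.

    Positive angles turn the whole sound field counter-clockwise (to the left),
    so a source at azimuth a ends up at a + angle_deg. W and Z are unchanged.
    """
    w, y, z, x = ambix
    a = math.radians(angle_deg)
    x_rot = x * math.cos(a) - y * math.sin(a)
    y_rot = x * math.sin(a) + y * math.cos(a)
    return [w, y_rot, z, x_rot]

front = [1.0, 0.0, 0.0, 1.0]        # a source straight ahead
print(rotate_foa_yaw(front, 90.0))  # now to the left: Y ~ 1, X ~ 0
```

Applied to every sample frame of a recording, the same function corrects a fixed misalignment; driven by a head tracker with the opposite angle, it keeps the sound field world-locked.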

Mirek Stiles, Abbey Road’s Head of Audio Products, gives some tips for getting started yourself: https://www.abbeyroad.com/news/getting-started-with-spatial-audio-2399

“When people ask me how do I get involved with Spatial Audio, my advice is to download the Facebook 360 software and just get stuck in. It’s free and is provided in both AAX and VST formats. You’ll need a DAW capable of handling 16 wide channels, as the FB software is 3rd Order Ambisonics; Pro Tools, Reaper and Nuendo can do this.”

– Mirek Stiles, Abbey Road Studios and Spatial Audio Forum

Encoding

When your audio (and video) file is ready to go, you are just one step away from uploading it to Facebook and/or YouTube. It needs to be encoded first, but that, too, has been made quite easy. The Facebook 360 Spatial Workstation tools come with an Encoder. Simply point the Encoder to the 360 video and the audio, and you will get a complete video file with spatial audio that is ready for publishing on Facebook. The Facebook 360 Encoder can also export videos in a YouTube-supported format.

However, to prepare 360 videos specifically for YouTube, your best option is the Spatial Media Metadata Injector. This tool adds metadata to a video file indicating that the file contains 360 video. You can download the Spatial Media Metadata Injector from the Google Spatial Media GitHub page.

“When you publish your first few videos with spatial audio, don’t forget to remind your viewers to check out the experience on iOS, Android, Chrome on Desktop, or Gear VR with their headphones!” – Facebook

Conclusion

Spatial Audio has come a long way. Even though development never stood still, it was often seen as complicated, and there was no persuasive reason to add it to commonplace mixing and processing tools. Until now. Easier signal processing and the extensive interfaces in today’s DAWs make it accessible to everyone willing to experiment with Spatial Audio. And by making the technology and associated tools highly accessible, the industry has opened the gate to a wider audience.

Is it the future? We believe that Spatial Audio is no longer the future; it is now, for both sound design and music. And we are not alone in this belief. Abbey Road Studios, under the supervision of Mirek Stiles, initiated the Spatial Audio Forum, where you will find great articles about this topic.

From their website: “Here at Abbey Road Studios, the home of audio innovation and the birthplace of stereo, we’re attempting to demystify the 3rd dimension of sound and lead the way into a fully immersive, spatial audio experience for listeners. As part of our mission, we have set up The Abbey Road Spatial Audio Forum which, alongside practical experiments and academic projects, aims to help artists navigate the space and deliver the best possible experience.”

And to answer the second question in our introduction, “Is [Spatial Audio] something for me as a music producer?”, we refer you to the interview with film, television and game composer Stephen Barton (Titanfall, Call of Duty, 12 Monkeys, Unlocked, Cirque du Soleil 3D, Jennifer’s Body and many others) on the Spatial Audio Forum.

When asked what Spatial Audio means to him, Stephen Barton replied: “The word revolutionary is thrown about very frequently in audio, and often without much justification – but it is justified here. When spatial audio is done well, it’s like the walls of the listening space, or the headphones, drop away completely – and the emotional effect of that is absurdly powerful. We all understand space in music, but we know it largely subconsciously, as one component of why most music is more powerful live, for example. As a composer and music producer, I can transport the listener not just to hearing a performance, but to hearing a place and a time, be it a realistic acoustic space or something much much more than that. The emotional power of that for both recorded music and live broadcast is impossible to describe.”

As you can see, there is still a lot to explore! We hope we have sparked your curiosity to give it a try yourself. We are certainly excited, and we’ll be sharing more Spatial Audio content on our Facebook Page to keep you in the loop. We also highly recommend checking the Spatial Audio Forum for regular updates.

We are trying to tell stories in our music. Don’t use spatial audio for its own sake. Ask how it will improve the storytelling and emotional impact.

Sources and references

https://www.abbeyroad.com/spatial-audio

https://www.abbeyroad.com/for-creators1

http://www.bbc.co.uk/guides/zrn66yc

https://hookeaudio.com/blog/music/best-binaural-albums/

https://hookeaudio.com/what-is-binaural-audio/

https://sonicscoop.com/2016/02/03/sound-all-around-a-3d-audio-primer/

https://assets.sennheiser.com/globaldownloads/file/9582/Approaching_Static_Binaural_Mixing.pdf

https://en-de.neumann.com/ku-100

https://training.npr.org/audio/360-audio/

https://blog.audiokinetic.com/working-with-object-based-audio/

https://www.facebook.com/facebookmedia/blog/introducing-spatial-audio-for-360-videos-on-facebook

https://lab.irt.de/demos/object-based-audio/

https://www.blueripplesound.com/head-tracking

https://sonicscoop.com/2018/02/05/audio-mixing-for-vr-the-beginners-guide-to-spatial-audio-3d-sound-and-ambisonics/


https://en-us.sennheiser.com/microphone-3d-audio-ambeo-vr-mic

https://www.newsshooter.com/2018/04/10/soundfield-rode-nt-sf1-tetrahedral-array-microphone-360-degree-surround-sound-capture/